A First Look at the Principles of Deep Convolutional Neural Networks (CNNs) with PyTorch

My first dive into the black box of AI. Let's see what is actually inside an ML network~

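A typical neural network workflow looks like this:

- Define the network structure with its learnable parameters (how the layers are stacked and how each layer is designed);

- Feed in the dataset;

- Process the input through the defined layers, which is the forward pass of the network;

- Compute the loss, which is done by the loss layer;

- Backpropagate to obtain the gradients;

- Update the parameter values according to the gradients. The simplest update rule (SGD) is: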

weight = weight - learning_rate * gradient
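The steps above can be sketched as a tiny PyTorch training loop. This is only a minimal illustration under assumptions: the toy data (`y = 3x + 1`), the variable names, and the learning rate are made up for the example and are not from the original notes.

```python
import torch

torch.manual_seed(0)

# Hypothetical toy dataset: points on the line y = 3x + 1.
x = torch.linspace(-1, 1, 32).unsqueeze(1)   # shape (32, 1)
y = 3 * x + 1

# Learnable parameters of a one-layer "network".
weight = torch.zeros(1, 1, requires_grad=True)
bias = torch.zeros(1, requires_grad=True)
learning_rate = 0.1

losses = []
for step in range(200):
    pred = x @ weight + bias              # forward pass
    loss = ((pred - y) ** 2).mean()       # loss (mean squared error)
    loss.backward()                       # backward pass: compute gradients
    with torch.no_grad():
        # The SGD rule: weight = weight - learning_rate * gradient
        weight -= learning_rate * weight.grad
        bias -= learning_rate * bias.grad
        weight.grad.zero_()
        bias.grad.zero_()
    losses.append(loss.item())
```

After the loop, `weight` and `bias` should sit close to 3 and 1, and `losses` should shrink from start to end. In practice `torch.optim.SGD` does the update step for you.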


Structure

Convolution Layer

Using different filters, we get a stack of filtered images, each with a different feature extracted.
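A small sketch of that "stack of filtered images" in PyTorch. The image size (9x9) and the number of filters (4) are arbitrary choices for illustration, and the filters here are just randomly initialized.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# One grayscale image: (batch, channels, height, width).
image = torch.rand(1, 1, 9, 9)

# out_channels is the number of filters; each filter yields one filtered image.
conv = nn.Conv2d(in_channels=1, out_channels=4, kernel_size=3)

with torch.no_grad():
    stack = conv(image)

print(stack.shape)  # one 7x7 filtered image per filter
```

The channel dimension of the output is the stack: four filters in, four filtered images out.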

Padding

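Avoids losing data at the borders after convolution, and makes better use of edge and corner pixels.

Types of convolution operation:

- Valid: no padding. An n x n input convolved with an f x f filter gives an (n-f+1) x (n-f+1) output.

- Same: pad the input so that the output has the same size as the input (for stride 1 and odd f, pad p = (f-1)/2 on each side).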
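The size arithmetic can be checked with a few lines of plain Python. The helper names `conv_output_size` and `same_padding` are hypothetical, written just for this note. The formula used is the standard floor((n + 2p - f) / s) + 1.

```python
def conv_output_size(n, f, padding=0, stride=1):
    """Side length of the output for an n x n input and an f x f filter."""
    return (n + 2 * padding - f) // stride + 1


def same_padding(f):
    """Padding per side that keeps the size unchanged (stride 1, odd f)."""
    if f % 2 == 0:
        raise ValueError("this simple rule assumes an odd filter size")
    return (f - 1) // 2


valid_size = conv_output_size(6, 3)                           # n - f + 1
same_size = conv_output_size(6, 3, padding=same_padding(3))   # stays n

print(valid_size, same_size)  # 4 6
```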

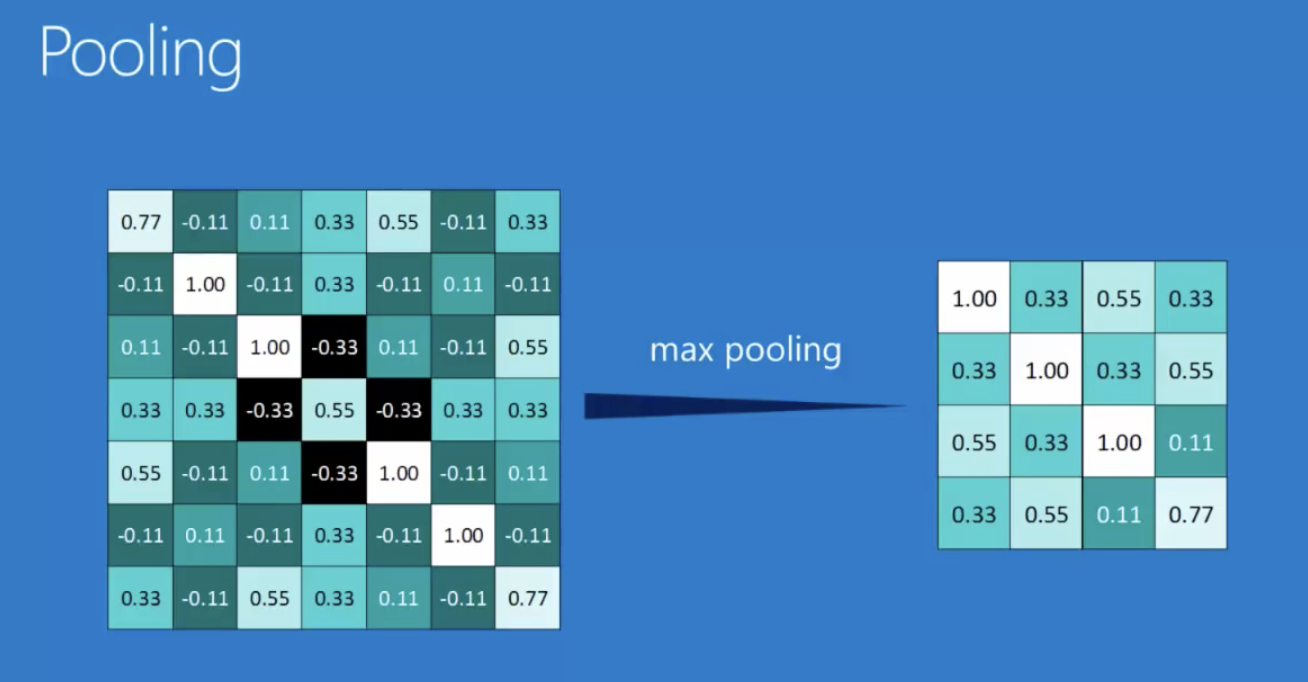

Pooling: Shrinking the image stack

Max pooling: the same pattern, but smaller.

- Pick a window size.

- Pick a stride.

- Walk the window across the image in steps of the stride.

- From each window, record the maximum value.

- The result is less sensitive to location, because it doesn't care exactly where inside the window the maximum occurs.
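The steps above can be sketched with `torch.nn.functional.max_pool2d`. The 4x4 image is made up for the example. The second call moves the largest value to a different spot inside the same window to show that the pooled output does not change.

```python
import torch
import torch.nn.functional as F

image = torch.tensor([[1., 2., 0., 0.],
                      [3., 9., 0., 5.],
                      [0., 0., 7., 0.],
                      [4., 0., 0., 0.]])

# Window size 2, stride 2: each 2x2 block is replaced by its maximum.
pooled = F.max_pool2d(image.view(1, 1, 4, 4), kernel_size=2, stride=2)
print(pooled.view(2, 2))  # [[9, 5], [4, 7]]

# Move the 9 from position (1, 1) to (0, 0), still inside the first window.
shifted = image.clone()
shifted[0, 0] = 9.
shifted[1, 1] = 1.
pooled_shifted = F.max_pool2d(shifted.view(1, 1, 4, 4), kernel_size=2, stride=2)
print(torch.equal(pooled, pooled_shifted))  # True
```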

Normalization - keep the math within bounds

Keep the math from breaking by tweaking each of the values just a bit.

Change everything negative to zero.

**Rectified Linear Units (ReLUs)**

Find any negative value and change it to zero.

ReLU layer: a stack of images becomes a stack of images with no negative values.
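In PyTorch this is a one-liner. The feature map values below are invented for illustration.

```python
import torch

feature_map = torch.tensor([[0.77, -0.11],
                            [-0.50, 1.00]])

# ReLU: negative values become zero, everything else is left alone.
rectified = torch.relu(feature_map)
print(rectified)
```

`torch.relu` gives the same result as `feature_map.clamp(min=0)`. Inside a model, `nn.ReLU()` is the layer form.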

Layer Stacking - the output of one layer becomes the input of the next

Deep Stacking

output -> input -> …

Conv layer (filter) -> Pooling layer (shrink) -> …
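A rough sketch of such a stack using `nn.Sequential`. The channel counts (8, 16), the kernel size, and the 28x28 input are assumptions picked for the example, not values from these notes.

```python
import torch
import torch.nn as nn

model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3),    # 28x28 -> 26x26, 8 filtered images
    nn.ReLU(),
    nn.MaxPool2d(2),                   # 26x26 -> 13x13
    nn.Conv2d(8, 16, kernel_size=3),   # 13x13 -> 11x11, 16 filtered images
    nn.ReLU(),
    nn.MaxPool2d(2),                   # 11x11 -> 5x5
)

x = torch.rand(1, 1, 28, 28)           # one fake grayscale image
with torch.no_grad():
    out = model(x)

print(out.shape)  # torch.Size([1, 16, 5, 5])
```

Each layer's output feeds straight into the next one: the images get smaller while the stack gets deeper.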

Fully Connected layer

Every value gets a vote. (Fully connected layers can also be stacked directly on top of each other.)

E.g. suppose the 4096 input nodes form a 4096x1 vector and the 10 output nodes form a 10x1 vector. Then the layer is a linear transform given by a 10x4096 weight matrix.
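The 4096 -> 10 example maps directly onto `nn.Linear`. Note that PyTorch stores the input as a row vector (batch first) and additionally adds a bias term, so the sketch below checks the layer against `x @ W.T + b`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

fc = nn.Linear(4096, 10)       # 4096 inputs vote on 10 outputs
x = torch.rand(1, 4096)        # one flattened input, batch size 1

with torch.no_grad():
    votes = fc(x)
    manual = x @ fc.weight.T + fc.bias   # the same linear transform by hand

print(fc.weight.shape)  # torch.Size([10, 4096])
print(votes.shape)      # torch.Size([1, 10])
```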

Backpropagation - Learning.

What gets learned: the filters (the feature pixels of the conv kernels) and the voting weights in the FC layers.

Gradient descent

Adjust each parameter a little and see how the error changes.
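"Adjust and see how the error changes" can be taken literally: nudge a parameter, measure the change in error, and step downhill. The sketch below does that with a finite difference on a made-up one-parameter error function (`error`, with its minimum at 2.0, is purely hypothetical). Real networks get the same slope from backpropagation instead of nudging every weight.

```python
def error(w):
    """Hypothetical error curve with its minimum at w = 2."""
    return (w - 2.0) ** 2


def numerical_gradient(fn, w, eps=1e-4):
    """Nudge w both ways and measure how the error changes."""
    return (fn(w + eps) - fn(w - eps)) / (2 * eps)


w = 0.0
learning_rate = 0.1
history = [error(w)]
for _ in range(50):
    w -= learning_rate * numerical_gradient(error, w)   # step downhill
    history.append(error(w))

print(w)  # close to 2.0
```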

Hyperparameters (knobs)

- Convolution

  - Number of features

  - Size of features

- Pooling

  - Window size

  - Window stride

- Fully connected

  - Number of neurons

- Architecture: how many of each type of layer?

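Hyperparameters or Parameters?

Parameters: values that the learning algorithm adjusts during training (weights w, biases b).

Hyperparameters: settings of the model that are chosen and tuned by hand.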
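One way to see the difference in PyTorch: hyperparameters are what you pass to the layer's constructor, and parameters are the tensors the layer then creates for training. The specific numbers (8 features of size 3x3) are arbitrary.

```python
import torch.nn as nn

# Hyperparameters: chosen by hand before training.
num_features = 8
feature_size = 3

conv = nn.Conv2d(in_channels=1, out_channels=num_features,
                 kernel_size=feature_size)

# Parameters: weights and biases that training will adjust.
for name, p in conv.named_parameters():
    print(name, tuple(p.shape))   # weight (8, 1, 3, 3), bias (8,)

num_parameters = sum(p.numel() for p in conv.parameters())
print(num_parameters)  # 8*1*3*3 weights + 8 biases = 80
```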

Algorithm

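Convolution

At its core, convolution is a weighted sum (a weighted integral in the continuous case). Here g is the weighting function for f: it says how strongly each point of the input f influences the output. In the mathematical definition ∑f(x)g(n-x), the argument n-x states what the weight of x depends on. It can also be read as a constraint, and in principle it could be any function of x rather than n-x (for example plain x, meaning every point x gets its own independent weight that depends only on x itself). Each field rewrites this weight constraint to suit its own needs.

In signal processing, n-x means the weight of x depends on the distance from x to n, i.e. the influence of an input sample depends on how long the signal has had to decay. In image processing, the weight of each pixel depends on its relative position, so the weight function g is written as a matrix: each pixel value is multiplied only by the weight at the corresponding position. That is a position-based weight constraint, rather than n-x.

Suppose an input (eating ice cream) acts on a system (body weight), and its effect obeys linearity and superposition. If the input keeps acting on the system over a period of time and we want the state of the system afterwards, a single convolution gives the answer!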
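The ice cream example can be written out as a discrete convolution in plain Python. All numbers here are invented: `ice_creams[x]` is how many were eaten on day x, and `response[k]` is how much extra weight one ice cream still contributes k days after it was eaten.

```python
def convolve(f, g):
    """Discrete convolution: out[n] = sum over x of f[x] * g[n - x]."""
    out = [0.0] * (len(f) + len(g) - 1)
    for x, fx in enumerate(f):
        for k, gk in enumerate(g):
            out[x + k] += fx * gk      # here n = x + k, so k = n - x
    return out


ice_creams = [1, 0, 2]            # eaten on day 0, day 1, day 2
response = [1.0, 0.5, 0.25]       # lingering effect 0, 1, 2 days later

weight_gain = convolve(ice_creams, response)
print(weight_gain)  # [1.0, 0.5, 2.25, 1.0, 0.5]
```

On day 2, for instance, the total is 2 fresh ice creams (2 x 1.0) plus what is left of the one from day 0 (1 x 0.25), which is the 2.25 in the output.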

ConvNets only capture local “spatial” patterns in data. The information is largely stored in the way the pixels are arranged, so if they were rearranged the information would be lost. The data must be made to look like an image.

Rule of thumb: if the data is just as useful after swapping any of its columns with each other, then you can’t use Convolutional Neural Networks.

ConvNets are great at finding patterns and using them to classify images.

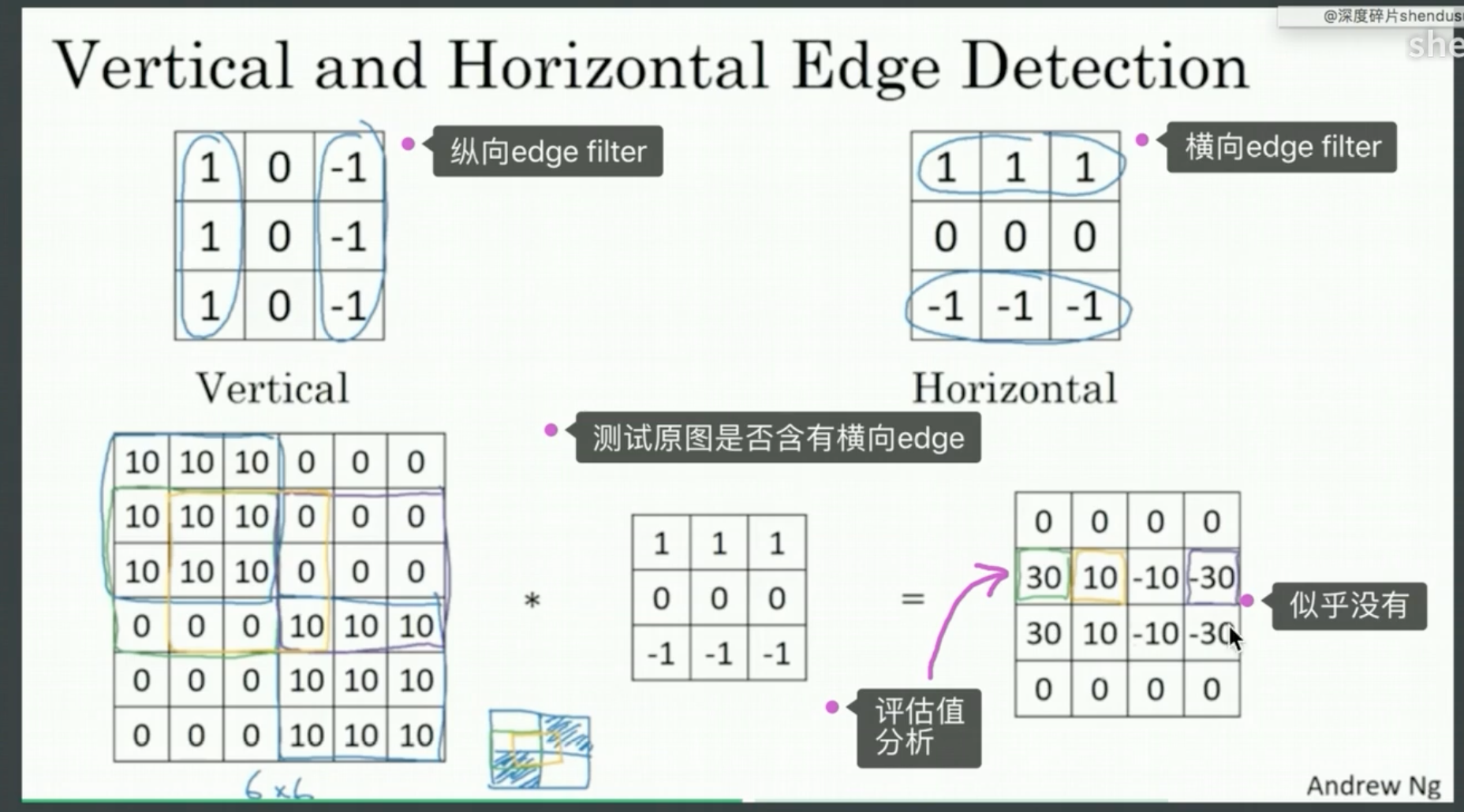

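Convolution kernel (filter)

Each filter evaluates/transforms the data according to the weights inside its kernel.

Through extensive training, a CNN keeps updating these weights, optimizing the filters against the training dataset.

Tailor-made: the target, the loss, and gradient descent force the filters to improve so that the loss is minimized.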
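To make the "weights inside the kernel" concrete, here is a hand-written 3x3 filter applied with `F.conv2d`. The image and the kernel (a simple vertical-edge detector) are made up for the example. Note that `F.conv2d` multiplies each pixel by the weight at the matching position without flipping the kernel.

```python
import torch
import torch.nn.functional as F

# 5x5 image: dark on the left, bright on the right.
image = torch.tensor([[0., 0., 0., 1., 1.]] * 5).view(1, 1, 5, 5)

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = torch.tensor([[-1., 0., 1.],
                       [-1., 0., 1.],
                       [-1., 0., 1.]]).view(1, 1, 3, 3)

response = F.conv2d(image, kernel)
print(response.view(3, 3))  # each row is [0, 3, 3]
```

The flat dark region scores 0, and the windows that straddle the edge score 3.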
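A toy version of this "tailoring": start from an all-zero filter and let a loss plus gradient descent pull it towards a filter that reproduces a given target output. Everything here (the random image, the target kernel, the learning rate, the step count) is an assumption for illustration, not the setup used later in these notes.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

image = torch.rand(1, 1, 8, 8)

# Target: the output of a hypothetical "ideal" edge filter.
target_kernel = torch.tensor([[-1., 0., 1.],
                              [-1., 0., 1.],
                              [-1., 0., 1.]]).view(1, 1, 3, 3)
target = F.conv2d(image, target_kernel)

# The filter to be learned starts with no knowledge at all.
kernel = torch.zeros(1, 1, 3, 3, requires_grad=True)
optimizer = torch.optim.SGD([kernel], lr=0.1)

losses = []
for _ in range(500):
    optimizer.zero_grad()
    loss = F.mse_loss(F.conv2d(image, kernel), target)
    loss.backward()        # gradient of the loss w.r.t. the filter weights
    optimizer.step()       # gradient descent nudges the filter
    losses.append(loss.item())

print(losses[0], losses[-1])  # the loss goes down as the filter improves
```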

Backpropagation

The backpropagation algorithm (BP)

Code (Talk is cheap, ______)

```py

#Dependency:

import torch

import torch.nn as nn